15-Mar-2013: BLAST 2.7.1 at SV-COMP'2013 and LDV experiments

15-Mar-2013: BLAST 2.7.1 at SV-COMP'2013 and LDV experiments

BLAST 2.7.1 was presented at the 2nd Software Verification Competition 2013 that took place at TACAS'13. This year BLAST won a Bronze in the DeviceDrivers64 category. The second place is taken by CPAchecker which is already integrated into Linux Driver Verification toolset. The winner of DeviceDrivers64 is a new tool named UFO.

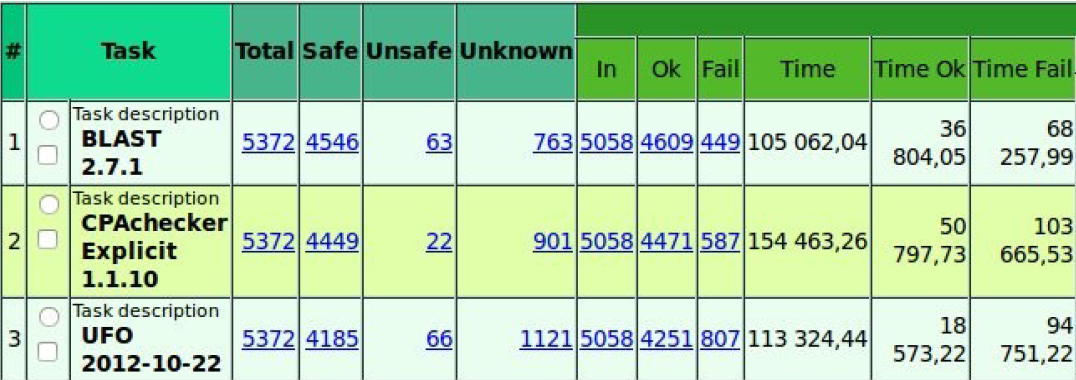

As soon as we look at the competition results from the perspective of the Linux Driver Verification project, we conducted a small experiment by running competition versions of BLAST, CPAchecker and UFO on about 5 thousand drivers from Linux kernel 3.8-rc1 to check one safety rule.

The results have shown that CPAchecker misses many UNSAFEs that means it misses many bugs in practice. We reported the issues to CPAchecker developers and took part in fixing them. As a result, development version of CPAchecker is much better for Linux device driver verification in comparison with the competition one.

Analysis of UFO results has shown that there are quite a number of missed SAFE verdicts (>400). But the biggest issue we faced with UFO is a problem with analyzing error traces for UNSAFE verdicts, since UFO produces a fragmentary description of error traces only.

In general, if an user cannot understand an error trace it means that the result is the same as UNKNOWN for him. From the user perspective we think that the competition rules should force tool developers to produce an understandable error traces. So the user which takes good tools according to competition results will not have such problems.

At the SV-COMP we had a number of meetings with UFO developers discussing this issue that comes from a number of code transformations inside of the UFO tool. But hopefully UFO will provide usable error traces soon.

Another observation is regarding time required for verification. Our run on drivers from Linux kernel 3.8-rc1 has shown that time needed for the OK results (SAFE or UNSAFE) may be quite small while the time for UNKNOWN results may be quite big (>3x). At the competition if the total number of points are the same for some tools, then they are compared by the time for correct results (SAFE or UNSAFE) and the time for UNKNOWN verdicts is ignored, but a user of verification tool waits for total time, so we think that the UNKNOWN time should be appreciated as well.

As a result of the experiment we proposed a couple of improvements for the competition rules that should make results more valuable for end users of verification tools.

- We found that it is important to have an understandable error traces. Preferably there are should be a common format for the competition tools which can be easily analyzed.

- Time for UNKNOWNs should be considered. At least it should be shown to the user in the summary results table.