29-Apr-2014: Linux Driver Verification is presented at Dagstuhl Seminar

29-Apr-2014: Linux Driver Verification is presented at Dagstuhl Seminar

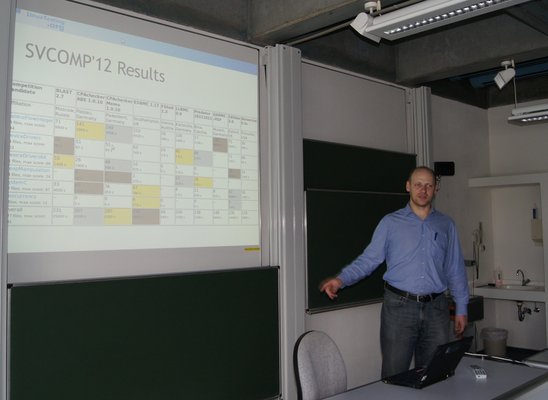

Linux Driver Verification Team members, Alexey Khoroshilov and Vadim Mutilin, took part in the Dagstuhl Seminar on Evaluating Software Verification Systems: Benchmarks and Competitions at April 21–25, 2014. The seminar was organized by Dirk Beyer (Universität Passau), Marieke Huisman (University of Twente), Vladimir Klebanov (Karlsruher Institut für Technologie) and Rosemary Monahan (NUI Maynooth).

In the talk "The Experience of Linux Driver Verification" we presented experience of the Linux Verification Center in verification of Linux device drivers. For that purpose we use software model checkers which solve reachability problems, i.e. they prove that labeled error locations in the program can not be reached by any execution starting from an entry point. But Linux device drivers have neither entry points no error locations. That is why the driver needs to be prepared for the verification.

The driver is essentially asynchronous. On driver loading, the kernel core invokes the initialization function of the driver which registers callback functions. Then these functions are called by the kernel core on receiving events from user space and from hardware. So for the verification we need to prepare an environment model with explicit calls of driver callback functions. The environment model should reproduce the same scenarios of interactions with the driver as in the real kernel and at the same time it should be simple enough to be analyzed by existing software model checker tools. For that purpose we proposed a method of environment modeling based on pi-calculus where the environment is represented as a set of communicating processes interacting with the driver via messages. From the pi-model we generate a C program which being combined with the driver becomes valid input for software model checkers with an entry point emulating all feasible paths in driver's code.

But this code still have no labeled error locations representing possible erroneous behaviour of the driver. So the second task is to prepare a specification on driver-kernel interfaces to be checked by software model checkers. The specification is weaved into the driver source code with the help of C Instrumentation Framework (CIF) and it becomes a source of labeled error locations.

In the talk we discussed the architecture of Linux Driver Verification Tools (LDV Tools) and showed its analytical and error trace visualization features. The comparison of CPAchecker with BLAST on the Linux drivers showed that while the total number of found bugs is the same the tools find different bugs. Thus it is worth to use the tools together. For now, LDV Tools helped to find more than 150 bugs which were approved and fixed in different versions of the Linux kernel.

In the talk we discussed lessons learnt. We found that it is important that the software model checker supports a full set of language features and could parse it. Moreover, the tool should not fail if it does not support some feature. If it cannot prove correctness it still may, for example, continue searching of unsafe trace if possible. We found that for software model checkers it is even more important to ignore thousands irrelevant transitions than efficiently handle relevant ones. Also, the engineering efforts can help to get significantly better results and we shared experience in speeding up BLAST from 8 times on small-sized drivers and to 30 times on medium-sized drivers.

On the base of the lessons learnt we made several conclusions how to improve the International Competition on Software Verification (SV-COMP). First of all, there are two different use cases for software model checkers. The first one targets to prove correctness of code under analysis regarding some properties, the second one targets to find as much bugs as possible. Currently, rules and a scoring scheme of SV-COMP evaluate tools from the first point of view, while it would be useful to evaluate the tools from the second point of view as well.

Another conclusion is that benchmarks we produced so far target a current generation of software model checkers and these benchmarks are not good to evaluate next generation tools. But for verification of device drivers we need much more features, like support of pointer analysis, specifications in terms of sets and maps, verification of parallel programs, data race detection, function pointers analysis, etc. Thus we should produce benchmarks which target these new features, not only existing ones.

Effective analysis of error traces produced by software model checkers in case of specification violation is crucial for the industrial use. At least, it should be possible to analyze error traces without knowing implementation details of the software model checker. A common representation of error traces required by the competition rules would ease usage of tools for driver verification, since error traces from different tools could be converted in the same way for uniform visualization and analysis with the help of LDV Tools.

Users wait for verification results in terms of wall time, not in terms of CPU time. Evaluation of verification time as CPU time in the competition does not encourage the developers to utilize available resources for parallelization. For example, the tools may use several CPU cores available on computers instead of using the single one, thus reducing wall time. It would be good if competition rules would encourage to reduce wall time of verification as well.